Rise of Nuke in Compositing

Research Topics

This research project will look into:

- The history of compositing

- Film Case Studies

- Nuke's appeal in its initial stage

- Film Case Studies Part 2

- What propelled Nuke into the mainstream pipeline?

- Who is Nuke's most dangerous competitor?

- How is Nuke keeping ahead of competitors?

- How is Nuke preparing for the future of compositing?

Early Compositing

First use of Compositing

The first generally agreed-upon use of compositing was by Georges Méliès in his short "Un homme de têtes" (1898). In this short he produced 3 heads that turned around and chattered on a table (figure 1). He accomplished this by blocking areas of the frame with black glass, leaving a portion of the film unexposed. This allowed him to re-expose the same film multiple times with himself in a different position on each pass.

figure 1. still taken from Georges Méliès, "Un homme de têtes" -1898.

Matte Painting

Filmmakers further improved upon this effect, creating split stages for dramatic shots. Later on, the matte painting, a crucial part of compositing, was developed by Norman Dawn in 1905 for the film “Missions of California”. The idea was to paint any structures wanted in the background onto a glass pane and then film the shot through the glass, as in figure 2. This is an important milestone, as it was the first time someone was able to add a large-scale background without needing to build an expensive and massive set.

figure 2. A Matte Painting - Norman Dawn - 1905

Optical Printing, The First Render Layers

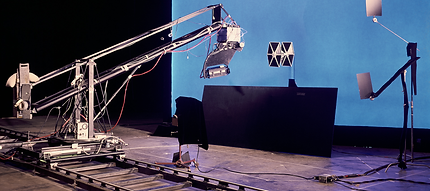

First introduced in 1918, the optical printer went through many iterations. Its purpose was to allow two pieces of film to be printed on top of each other to make one. Its resurgence in popularity in 1977 was championed by its impressive use in the making of Star Wars. ILM built the first computer-controlled camera rig, the Dykstraflex, which produced up to four reels at a time. These needed to be edited and printed together to make the final product, and this would set the precedent for compositing up until 1985.

figure 3. Photo of the Dykstraflex at ILM

Digital Compositing

The first recorded use of digital compositing was accomplished by ILM for the film Young Sherlock Holmes (1985), where they were able to create the first fully CGI character and composite it into a film. This marked the beginning of digital compositing. Soon after, Quantel released the first digital compositing software, Harry, which was praised for its usefulness: “You could go into a Harry session with a sow’s ear and come out with a silk purse,” - Richard Edlund

figure 4. Still taken from Young Sherlock Holmes

Shake

Shake was created in 1996 by Arnaud Hervas and Allen Edwards and was widely used as the industry-standard VFX compositing software before Nuke. Shake started off as a command-line tool for VFX and was adopted by most studios from 1997. It gathered fame in the 2000s, being used on award-winning films like Lord of the Rings, Harry Potter, and The Dark Knight. However, in 2006 Apple announced that it would be discontinuing Shake within 3 years, as Apple became more focused on selling phones. Finally, in 2009 Apple removed the option to buy Shake, ending its reign over the VFX industry for good.

figure 5: Shake's software box

Development of Early Effects and Compositing

2001: A Special Effects Odyssey

ILM credits Stanley Kubrick's 2001: A Space Odyssey as the moment when studios realized SFX could be used convincingly: "Kubrick's 2001 was the big special effects movie, but it was so big and expensive and awesome that it really didn't open up a lot of other possibilities [for effects] other than be an inspiration that effects could be done in a quality way." - George Lucas.

2001 used miniature models that were designed to look as technically credible as possible, with some of the work even being outsourced to aerospace engineering companies.

This was one of the first films to make extensive use of front projection to make studio shots feel like they were filmed outside, as well as lots of rear projection for the on-set display screens.

Douglas Trumbull developed the Slit Scan Machine, which allowed the filming of two planes with exceptionally high and low levels of exposure. To make the effect seen in figure 6, the team also filmed aerial landscape footage, applied different coloured filters, and shot interacting chemicals.

figure 6: "Star Gate" Sequence

The Tiger, The Magician and the Morphing System

Willow (1988) was the first test run of ILM's CG department, and in doing so they achieved an image-processing breakthrough. In the scene, Willow sets off a succession of metamorphosing effects, turning a goat into an ostrich, into a turtle, and into a tiger, as seen in figure 7. To make this effect they collected plates of live animals and puppets, then distorted the images into each other using custom in-house software developed by Doug Smythe called the "Morphing System". This wasn't the only major development from this production: ILM software engineers also created a digital way to extract the blue-screen backgrounds from shots, allowing them to work through more shots more quickly.

figure 7: Willow transforming scene

Indiana Jones and the First All Digital Composite

Indiana Jones and the Last Crusade includes a sequence ILM dubbed "Donovan's Destruction", in which Donovan drinks from what he believes is the Holy Grail and ages quickly before disintegrating into ash. While planning the shot, ILM set out their approach: "three motion-controlled puppet heads showing Donovan in advancing stages of decomposition would be filmed". This was done by creating wax molds and sucking them in around a skull to get the sunken, hollowed-out feel. They then used motion control and their Morphing System from Willow to finish the effect you can see in figure 8. Instead of optically compositing the final shot, ILM opted to scan the elements, composite them digitally, and output the result to VistaVision film, making it ILM's first ever all-digital comp.

figure 8: Donovan's Demise scene

Another Basement Another Matte Painting

The final shot of Die Hard 2 called for a slow pull-out shot of a snowy runway filled with personnel and planes. The predicted cost of doing this shot practically was far too high, so Renny Harlin decided on a matte painting. However, painting one practically and using rear projection would be impossible, as the painting would have to be an estimated "30 feet long and 14 feet wide" - Bruce Walters. Instead, they opted to digitally manipulate and composite the painting and live action, something that had never been done before. To solve the massive processing-power issue, Walters came up with the idea that instead of painting at 100x resolution they would paint four paintings at 4x resolution and blend between them as the camera zooms out. After the paintings were made, ILM digitally composited live-action elements over the top, such as planes, people, and vehicles. It was "the first time digital techniques had been used to blend a painting with live-action elements." - Cotta Vaz M

figure 9: Ending scene

Just a Simple Man Trying to Make VFX Invisible

Forrest Gump is a treasure trove of invisible effects. Here, ILM really showed off their skills, creating a huge number of realistic VFX shots.

"This could just be a grand experiment. The shots on Gump were absolutely the hardest I've ever been involved with because of their subtlety. Effects shots with spaceships or where everything [is] blowing apart are easier to do than the kind of really beautiful subtle naturalistic touches that Gump is absolutely riddled with. There's no way to distract an audience to get you past the weakness because everyone is familiar with the natural world. So if there's a problem with how you're presenting it that audience may not understand what's going on but it will feel wrong [to them]." - Ken Ralston

Stand-out effects include the opening scene with the feather in figure 10, where ILM filmed 25 feathers on blue screens and blended them together digitally.

Lieutenant Dan's legs had to be removed for most of the film. Furthermore, any objects that the legs would have passed in front of or come into contact with had to be added in post too.

There were lots of crowd and set extensions, done by multiplying crowds around the scene and adding elements, as seen in figure 11.

ILM also digitally altered famous footage to add Forrest Gump into it. For example, Gump meets multiple presidents and appears at famous events throughout the 1900s.

Ultimately, Forrest Gump is a culmination of all the special and visual effects work ILM had produced over the previous 20 years, with shots that still hold up 30 years on. It utilises lessons learnt from Willow as well as the paintings and set extensions from Die Hard 2, resulting in some of the best digital compositing work produced.

figure 10: Opening Feather Scene

figure 11: Before and After plate taken from Forrest Gump and ILM

Nuke's Early Development

Nuke was written in 1993 by Bill Spitzak and Phil Beffrey at Digital Domain. The in-house software they used before was a command-line, script-based compositor, alongside Flame, Autodesk's digital compositing tool. Bill started writing the tools for Nuke as a script-generating program, but realised it would be easier to make the program process the image itself rather than output a script. After more work and artist feedback they created the first version of Nuke, which used “fixed-sized buffers and a command line. But it could do transforms and blur and sharpen filters and colour corrections, and composites.” - Spitzak 2010. After rapid improvements, version 2 was released a year later with its iconic GUI and the start of its 3D system, a card operator.

Nuke's Creation

figure 12: Screenshot of Nuke Version 1's UI - n.d

Foundry's Foundations

At the same time, Simon Robinson and Bruno Nicoletti formed The Foundry. Drawing on their computing background, they spent their time making plugins for Flame and Inferno, the industry-leading software at the time. This allowed their company to become well known, as these plugins were used all across the industry. This foundation proved critical for Nuke's success later on.

figure 13: Simon Robinson and Bruno Nicoletti at the formation of Foundry - n.d

Industry Recognised

In 2002, at the Academy Awards for Scientific and Technical Achievement, the Nuke development team won an award "for their pioneering effort on the NUKE-2D Compositing Software. The Nuke-2D compositing software allows for the creation of complex interactive digital composites using relatively modest computing hardware." - Academy 2002.

figure 14: Academy Logo

IB Keyer

Nuke's IBK (Image Based Keyer) was the first keyer to ship as standard with the package. This keyer was developed by Paul Lambert in 2004. While explaining the node, Lambert described "putting different shapes onto a blue background or onto a green background and blurring those shapes and getting that color to bleed into the blue. It came from all the bits and pieces that I had learnt about channel differences." This keyer was special because, unlike its competitors, it was simple to use: with only a few sliders, its goal was to be a foundation on which other compositors could expand. IBK works with 3 inputs: the plate, the background, and the clean plate. From there it is able to comfortably key almost any shot you throw at it.

figure 15: IBK in use inside of a Nuke script
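The "channel differences" Lambert mentions can be illustrated with a very small colour-difference key. This is only a sketch of the general idea for a green screen, not IBK's actual algorithm: the function names are hypothetical, pixels are plain RGB tuples in the 0 to 1 range, and the clean plate is assumed to line up exactly with the shot.

```python
def channel_difference(pixel):
    """How much green exceeds the larger of red and blue (0 if it does not)."""
    r, g, b = pixel
    return max(g - max(r, b), 0.0)


def key_alpha(plate_pixel, clean_pixel):
    """Estimate foreground alpha for one pixel (hypothetical helper).

    plate_pixel: RGB of the shot, with the subject in front of the screen.
    clean_pixel: RGB of the same pixel on the empty screen (the clean plate).
    """
    screen_amount = channel_difference(clean_pixel)
    if screen_amount == 0.0:
        # No screen colour here to key against, so treat it as solid foreground.
        return 1.0
    # Fraction of the screen colour still visible in the shot at this pixel.
    remaining = channel_difference(plate_pixel) / screen_amount
    return 1.0 - min(remaining, 1.0)
```

Comparing against a clean plate rather than a single picked colour means uneven screen lighting is measured per pixel, which is one reason a clean plate input is useful.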

Foundry's Buyout

In 2007 Foundry was looking for a software platform in which to expand and test its plugins, and Nuke fit the bill. “We'd all been aware of it for a while, and knew it was highly regarded,” recalls Simon. “It looked like a fun way to expand what we did, and to further our interest in continuing to do ‘more stuff’.” - Simon Robinson n.d. With this acquisition, Foundry made all its plugins standard and supported in the latest version of Nuke, 4.7. The first major Foundry version of Nuke came in the form of 5.0, with Python scripting and support for EXR images as the flagship features. This change opened Nuke up to mainstream development, as studios could now easily code their own custom tools without having to learn a specialised language.

figure 16: The Foundry Logo

Weta VS ILM ft. Digital Domain, The Foundations for Modern Day Compositing and VFX

Star Wars, The VFX Menace

After ILM finished the stunning CG work on Jurassic Park, George Lucas felt it was time to revisit Star Wars and begin production on the prequels. Early on, ILM realized that "our image file format and the image processing software we were using were insufficient in terms of the dynamic range they could represent. The difference between the darkest blacks and the brightest whites that could occur in the same image was too small for many real-world scenes." - Kainz F. At the time, Rod Bogart was working on in-house software that stored images with 16-bit integer samples, which left a lot of bits unused. The team developed this software so that, instead of storing integers, it stored floating-point numbers that could cover a wide range at fine resolution, improving the quality of the image without increasing the memory used. This development was used in the production of Star Wars: The Phantom Menace and later became known as OpenEXR.
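The trade-off between 16-bit integers and 16-bit floats can be checked with Python's standard library, which can pack IEEE 754 half-precision values. This is a rough sketch rather than ILM's code: the integer encoding (white mapped to code 65535) and the helper names are assumptions made for illustration.

```python
import math
import struct

HALF_MAX = 65504.0            # largest finite 16-bit float value
HALF_MIN_NORMAL = 2.0 ** -14  # smallest normal 16-bit float value


def to_half(value):
    """Round-trip a value through 16-bit floating point storage."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


def to_uint16(value, white=1.0):
    """Round-trip a value through a 16-bit integer code (assumed encoding:
    0 is black and 65535 is `white`, with anything brighter clipped)."""
    code = round(max(0.0, min(value / white, 1.0)) * 65535)
    return code * white / 65535


def stops(brightest, darkest):
    """Dynamic range in photographic stops (doublings of light)."""
    return math.log2(brightest / darkest)


# A highlight four stops over white survives as a float but clips as an integer.
highlight_float = to_half(16.0)
highlight_int = to_uint16(16.0)

# A deep shadow falls below the smallest integer step but not below the float's.
shadow_float = to_half(0.000005)
shadow_int = to_uint16(0.000005)
```

Under these assumptions the integer encoding spans roughly 16 stops between its smallest non-zero code and white, while the normal range of a 16-bit float spans roughly 30, in the same two bytes per sample.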

OpenEXR would soon be put to the test when production of The Phantom Menace kicked into gear. The first major development that came out of this project was new in-house software called Comptime, originally developed by Jeffrey Yost on Twister. It was praised by compositing supervisor Greg Maloney: “Comptime made life easier for the TDs and compositors. If we needed to do a particular function, such as a dissolve, instead of writing lines of code, all we had to do was go to a pull-down menu for the dissolve function.” This development saved time and reduced costs, and would be used heavily when creating the lightsaber effects, applying a custom function to a roto shape to give it its iconic glow.

Another major milestone was the integration of a fully CG main character, Jar Jar Binks. Initially, George Lucas had planned to use CG only for Jar Jar's head; however, after a test from the VFX team it turned out that it would be easier to create Jar Jar as a full CG character. From a compositing standpoint this meant that they had to remove Ahmed Best, the actor playing Jar Jar, from all the shots, composite the CG character in, and do final effects like adding Jar Jar's shadow over live actors and scenes. This was done with roto shapes, lowering the gamma of the shots inside them to give a fake shadow effect.
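The fake shadow described above can be sketched in a few lines. This is an illustration only, not ILM's tooling: it assumes pixel values in the 0 to 1 range, a roto matte where 1 means fully inside the shadow shape, and the common convention that a gamma below 1 darkens the mid-tones. The function names and the default gamma of 0.6 are hypothetical.

```python
def apply_gamma(value, gamma):
    """Gamma correction on a 0-1 value; gamma below 1 darkens, above 1 brightens."""
    return value ** (1.0 / gamma)


def fake_shadow(pixel, matte, gamma=0.6):
    """Darken an RGB pixel with a gamma change, limited by a roto matte.

    matte is 0 outside the roto shape, 1 inside, and in between on soft edges.
    """
    darkened = tuple(apply_gamma(channel, gamma) for channel in pixel)
    # Mix the original and darkened pixel according to the matte.
    return tuple(c * (1.0 - matte) + d * matte for c, d in zip(pixel, darkened))
```

Because gamma leaves pure black and pure white unchanged, the shadow mainly pulls down the mid-tones, and a feathered roto edge gives the soft falloff a real shadow would have.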

Lastly, the sci-fi environments were created with a blend of live sets, matte paintings, and miniatures, needing soft compositing work to make them feel connected and real. Once all these sets were in place, it was the compositor's job to fill the scenes with live-action people and CG creatures to make the environment feel alive and lived in.

figure 17 : Qui-Gon Jinn's Lightsaber

figure 18 : Brian Flora (left) and Lou Katz (right) working on an all-digital representation of Theed

One Studio to rule them all... Weta and Lord of the Rings

Peter Jackson's The Lord of the Rings: The Fellowship of the Ring is a testament to great compositing across CG, live action, and miniatures. Weta FX was the lead studio, using and developing new in-house software to bring the epic scale of Middle-earth to life.

The opening sequence, involving the battle at the base of Mount Doom, is the perfect example to look at first, focusing on Weta FX's in-house software "Massive", developed by Stephen Regelous very early on in the project. Massive stands for "Multiple Agent Simulation System in Virtual Environment". In the words of Weta chief technical officer Jon Labrie, "Peter was saying how he’d like to have 50,000, 100,000 people in this battle scene. Steve Regelous had been interested in artificial life for some time; and he thought the best way to give Peter what he wanted was to build intelligent models, or agents, that have smarts - you actually build brains for them." With this newly developed software, Weta was able to populate the background behind the live plate of actors with near-infinite numbers of CG characters, helping set the epic scale of this battle.

However, with numbers this large the hardware load was going to be massive. In preparation, Weta developed their second system, a new renderer called Grunt, "Guaranteed Rendering of Unlimited Numbers of Things", designed by Jon Allitt. He explains that Grunt only renders what needs to be seen but stores where each asset is, allowing it to show and hide assets as needed. This massively cut down the cost and time required to produce these scenes with lots of CG characters. When rendering, the crowds were split up into groups of 15,000 to 20,000, with each group taking around 2 to 4 hours. Because the groups were on individual layers, the team were able to comp atmospherics between them, giving a believable sense of scale and depth.

The next large task was showing on screen that the Hobbits are supposedly 3 feet tall. This was achieved practically and digitally, as each shot called for. Digitally, Weta would film an actor on a blue screen, remove the screen digitally, and scale the footage up or down before layering the actor back into the scene. However, a lot of the shots were done practically, using forced perspective in the environment, giving the hobbits oversized props, and using short actors when their faces weren't visible.

When Frodo puts on the ring and enters the wraith world, Peter Jackson describes it as a "Twilight Vision" and a "high-frequency, turbulent look where the edges of things are tattered and frayed", with similarities to heat distortion. 2D Lead Charles Tait and 3D Lead Colin Doncaster came up with the idea of using 3D particles emitted from the camera to drive a smear effect inside software called IMX. John Nugent explained, "We left Elijah Wood’s face untouched to create the impression that the disturbed images are what Frodo is seeing, and that we are in the wraithworld with him.” Taking the smeared plate into Shake gave a 3D effect that would smear from the back forward, adding to the unnerving feeling.

Let's look at the flyover shot of Isengard composited by Mark Tait Lewis. This shot seamlessly blends miniatures, live action, and CG to create the tower sequence. Due to limitations on set, they couldn't capture the full camera movement up the tower. Instead, they first filmed as much as they could using a 1/35th scale set before blending over to a CG scene for the last stretch of the tower, and then matching up with the live plate of the top of the tower with Gandalf on it. This was already impressive enough; however, at the same time they composited in CG Orcs, animated and comped a CG moth for Gandalf to catch, and comped atmospherics like smoke and fires to bring this fortress to life.

figure 19 : showing 100,000 CG characters in a shot using Massive and Grunt

figure 20 : Showing live elements against the final shot

figure 21: scene of Frodo in the Wraithworld smear effect

figure 22: shot of Isengard flyover with smoke, birds, and orcs

Tentacular Effects and Keeping It Real

Pirates of the Caribbean: Dead Man's Chest produced arguably the first perfect VFX character in the form of Davy Jones. This milestone could only have been achieved through the careful planning of VFX supervisor John Knoll alongside clever compositing, and there are lessons to be learned from the success of these shots.

At its core, the lesson is to keep as much as you can real. When making Davy Jones' face, compositors made sure to keep Bill Nighy's eye performance. On set he wore eye and mouth makeup and a mocap suit. Later, in the compositing phase of the project, his eyes and mouth were used as much as possible in combination with the digital makeup effects that gave him the tentacles. Another example of ILM's lesson is their approach to water effects: whenever actors interacted with water, compositors tried to keep as much of the real splashes and wake as possible before adding simulated water effects over the top. One of the biggest things that compositors can take away from the VFX of this film is to try to preserve as much of the original plate as possible.

figure 23: (left) live plate of Bill Nighy (right) Final shot after CG makeup

Transformers, More VFX than meets the eye.

In 2007 ILM was tasked with the production of Transformers. With new technologies like ray tracing having just come into use, ILM felt it was the perfect time to showcase photorealistic robots.

The first major milestone in this project was the decision to mix ray tracing, a then-new way to render light reflections and bounces that is also very hardware intensive, with reflections created manually through compositing. The larger, more important parts of the models would be rendered with ray tracing, and then the team at ILM would manually draw the reflections onto the rest of the objects. At the same time, compositors drew on micro details like scratches and grunge, helping make the robots feel more photorealistic.

In true Michael Bay fashion, a lot of the footage was filmed with live-action smoke, explosions, and dirt. This led to issues later on in production, requiring a monumental amount of rotoscoping and layering of 2D assets to blend the CG back into the dirty plate. VFX Supervisor Scott Farrar would go on to say "The first thing Michael Bay said when we started this, I want to do things real and dirty. Can we blow things up and have real dust and debris on set, with lots of lights and flaring and bling?" He later explains that there wasn't much pushback as "that’s just how movies are made now". This decision made things more difficult for the compositors and roto/paint crew, though some would argue that the practical effects are what made the film so believable.

Another large task was compositing the destruction the robots caused. Every time a robot interacted with the world in a fight, it would crush and smash buildings and floors. ILM used a mix of miniatures, simulations, and 2D assets to help add interactivity to the scenes. "As the show progressed, ILM modelers built a library of debris that could be parented to the simulated explosions to make them look as photorealistic as those orchestrated on the set by John Frazier." Some shots called for miniatures of skyscrapers to be built so they could get shots of Transformers crashing through buildings. Even these were digitally altered, adding extra debris and cleaning up wires.

figure 24: Comparison of Final Shot (Top) and the Live Plate (Bottom) showing use of practical effects and accurate reflections

figure 25: Miniature set used for destruction shots

Nuke's break into the mainstream pipeline.

In the lead-up to the 2010s, Nuke was famously used by The Orphanage to create the HUD for Iron Man (2008). "We realised that we need to see it [The HUD] on a curved surface and the 3D comping program we were using to do that just wasn't capable of doing it" - "the only thing I could think of was nuke" - Dav Rauch, HUD Supervisor and Designer. Inside Nuke, the HUD elements, which were made in Illustrator and Nuke, were attached to a curved surface and animated in 3D. Nuke also made it easy to create the reflection in RDJ's eyes: using matched eye geo, Dav was able to project the HUD onto them to get accurate reflections.

Nuke's largest development, and the one that propelled it into becoming the industry-standard software, is undoubtedly deep compositing (2011). "Deep images contain multiple samples per pixel at varying depths and each sample contains per-pixel information such as color, opacity, and camera-relative depth." - Foundry. Using this data in Nuke, you can quickly composite multiple assets without needing to worry about the merge order of the scene. Furthermore, if assets interact with each other, passing back and forth, deep compositing will sort out what's on top of what automatically. Ultimately, deep compositing smoothed out the management and efficiency of scenes that use a lot of 3D assets, making it the standard for industry compositing from then on.
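Why the merge order stops mattering can be seen in a toy version of a deep pixel. The sketch below is not Nuke's implementation: a deep pixel is assumed to be a plain list of samples, each a dictionary with a hypothetical `depth`, a single-channel unpremultiplied `colour`, and an `alpha`, and volumetric samples that cover a range of depths are ignored.

```python
def deep_merge(*deep_pixels):
    """Combine the samples of several deep pixels into one, nearest first."""
    samples = [sample for pixel in deep_pixels for sample in pixel]
    return sorted(samples, key=lambda sample: sample["depth"])


def flatten(deep_pixel):
    """Composite samples front to back into (premultiplied colour, alpha)."""
    colour, alpha = 0.0, 0.0
    for sample in sorted(deep_pixel, key=lambda sample: sample["depth"]):
        visible = 1.0 - alpha  # how much is still unobscured at this depth
        colour += sample["colour"] * sample["alpha"] * visible
        alpha += sample["alpha"] * visible
    return colour, alpha


# Hypothetical renders: thin smoke near the camera, a solid character behind it.
smoke = [{"depth": 5.0, "colour": 1.0, "alpha": 0.5}]
character = [{"depth": 10.0, "colour": 0.2, "alpha": 1.0}]

result_a = flatten(deep_merge(smoke, character))
result_b = flatten(deep_merge(character, smoke))
```

Both calls give the same flattened pixel because the stored depths, not the order of the inputs, decide what sits in front. With ordinary 2D merges the artist has to get that order right by hand for every layer.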

During the production of The Hobbit: The Desolation of Smaug (2013), while talking about the usefulness of deep compositing, Dr Peter Hillman, a senior software developer at Weta FX, said deep compositing is the “standard way we do absolutely everything – I don’t know how to turn off deep data in the pipeline.”

In 2016 Nuke released its Smart Vector toolset. "The Smart Vector toolset allows you to work on one frame in a sequence and then use motion vector information to accurately propagate work throughout the rest of the sequence." - Foundry. This development was substantial because the motion vectors only need to be rendered once, which makes the technique invaluable for long shots. It works by generating motion vectors from the footage and writing them to disk, and those vectors are then used to warp the work done on the reference frame so that it follows the movement in the plate.
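The idea of propagating work with motion vectors can be sketched with a handful of points instead of a full image. This is a simplified illustration, not the Smart Vector toolset itself: the vector fields are assumed to be plain dictionaries with whole-pixel offsets, whereas real motion vectors are sub-pixel and are used to warp entire images. The function name is hypothetical.

```python
def propagate(points, vector_fields):
    """Carry points painted on the reference frame through the later frames.

    points: list of (x, y) pixel positions on the reference frame.
    vector_fields: one dict per frame step, mapping (x, y) to (dx, dy),
                   the motion of that pixel from one frame to the next.
    Returns one list of points per frame, starting with the reference frame.
    """
    frames = [list(points)]
    for field in vector_fields:
        moved = []
        for x, y in frames[-1]:
            # No vector stored for this pixel: assume it did not move.
            dx, dy = field.get((x, y), (0, 0))
            moved.append((x + dx, y + dy))
        frames.append(moved)
    return frames


# A paint fix at pixel (2, 2) that drifts right, then down, over two frames.
fields = [{(2, 2): (1, 0)}, {(3, 2): (0, 2)}]
tracked = propagate([(2, 2)], fields)
```

Because the vector fields are computed once and reused, any number of paint fixes made on the reference frame can be carried through the shot without analysing the footage again.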

Foundry also ensures that for every feature it changes, the old node is kept inside the software, allowing comps to keep working no matter what version of Nuke you are on. Furthermore, you can access these old versions of nodes if you prefer to use them over the new ones.

figure 26: Screen capture from Planet of the Apes Nuke script

Competitors

DaVinci Resolve

DaVinci Resolve, made by Blackmagic Design, claims to be "the world’s only solution that combines editing, color correction, visual effects, motion graphics, and audio post-production all in one software tool". One of DaVinci Resolve's major selling points is its pricing: it is free to use for footage up to 4K, and the paid version of the software is a one-time license. It's not ready to replace Nuke for compositing; however, for many editors it has already replaced software like Premiere Pro. Resolve looks to be competitive heading into the future, although it appears to be prioritising its editing tools over any heavy compositing work.

After Effects

After Effects excels at beginner VFX. Its largest appeal is that it is easy to learn and understand, making it popular with beginners. However, anyone attempting to use a modern compositing workflow with multi-layer EXRs quickly realizes that it falls behind the likes of Nuke. In media, After Effects is mainly used for motion graphics, as at its core it is a 2.5D animation package. This means it cannot compete with other compositing packages when it comes to live-action work or 3D compositing.

Flame

Flame was an industry-standard software package before Nuke came around. It excelled at producing fast composites and ran extremely efficiently, making it perfect for client meetings. However, as hardware got better and M.2 drives were released, that speed became a niche rather than a selling point. This left a gap in the market for a compositor that ran well and could handle heavy effects. Researching this era of Flame was extremely difficult, which could be a further indication of how quickly Nuke surpassed it. Online users claim it is very quick but struggles with complex shots, making it potentially an option for advertisements and client meetings.

How does Nuke keep ahead of its competitors?

Large studios dictate what is industry standard, and Nuke has the blessing of being their chosen standard. Now that Nuke has earned this title, it will be almost impossible for another software package to rise up and dethrone it, simply because the cost for studios to retrain all their staff on new software is too great. A competitor must be so much better than Nuke that it makes up for the months of training needed to get back to the level of skill and detail that current Nuke artists have. This means that Foundry doesn't have a lot of pressure to innovate rapidly, so it can spend more time developing and researching which features would be the best to add.

Alongside the Nuke license, Foundry offers other software, like Hiero, an excellent tool for managing multiple shots in a team. This tool is also used across many VFX houses to manage pipelines and give daily feedback.

Regarding innovations, Nuke has recently started developing its machine learning toolset. For example, the CopyCat node is designed to automatically complete repetitive tasks like rotoscoping: you can remove a label on the first and last frame, then the CopyCat node, given time, will remove the label on all the middle frames. In principle this is a great addition; however, it relies heavily on the GPU of a PC. Furthermore, for complex shots it takes a long time to train, and some compositors argue that it's quicker to do these by hand. On the other side, for short and simple cases where training takes only a short time, compositors argue that it is still quicker to do the work by hand. Right now CopyCat offers a glimpse into what the future might look like; however, in its current state it has yet to be fully embraced by the industry as a whole.

What does the future of Compositing look like?

Machine learning is rising in popularity across all industries for its ability to automate repetitive tasks, starting with deepfake technology and expanding into CopyCat. With Nuke expanding on the CopyCat node, we can expect to see major improvements in rotoscoping and painting. Edge mattes will be made easier to set up; however, I can't imagine it will be able to roto around thin hairs. Machine learning will reduce the amount of outsourcing that studios must do.

In conversation, Luke Warpus mentioned that Untold Studios uses ComfyUI to generate depth passes and normal maps. Furthermore, Alfie Vaughan, who works as a 2D artist at The Mill and as a VFX Artist at Coffee and TV, mentioned while talking about a day in the life of a VFX artist that they use AI to create concept images and light previs, so they don't have to spend 3 days getting someone to make something. As AI rapidly develops, it is inevitable that it will be adopted by the industry. For example, the 2024 Coke advert was made using AI, and after the legal issues have been smoothed out we can expect to see AI being used to help guide compositors instead of replacing them.

DaVinci Resolve's Fusion appears to be leading the charge when it comes to merging software; however, it lacks the compositing capabilities necessary to make it industry standard. In the future we might see DaVinci Resolve offer deep compositing support or a better base toolkit.